How to Improve Data Quality and Reproducibility in Label-Free Proteomics?

- Principal Component Analysis (PCA) to evaluate sample clustering consistency

- Coefficient of Variation (CV) analysis to identify stable proteins

- Cross-validation of quantification results with biological plausibility

Label-free Quantitative Proteomics (LFQ) has emerged as a widely adopted approach in biomedical research, owing to its straightforward sample preparation, broad applicability, and relatively low cost. However, challenges related to data quality and reproducibility remain major obstacles that limit the depth and reliability of LFQ applications. In this article, we systematically examine the key factors that influence LFQ data performance and present optimization strategies for each critical stage—experimental design, sample preparation, mass spectrometry acquisition, and data analysis—to support scientists in generating high-quality and reproducible proteomics data.

Main Factors Affecting the Data Quality and Reproducibility of Label-Free Quantitative Proteomics (LFQ)

1. Consistency of Sample Preparation

Sample preparation forms the foundation of proteomics workflows. Even minor variations in lysis conditions, protein quantification accuracy, or enzymatic digestion efficiency can introduce systematic biases and ultimately compromise quantitative outcomes.

Common issues include:

(1) Incomplete lysis of cells or tissues

(2) Inaccurate estimation of total protein concentration

(3) Incomplete or excessive trypsin digestion

(4) Introduction of contaminants (e.g., keratin)

2. Stability and Parameter Settings of the Mass Spectrometer

The success of label-free quantitative proteomics relies heavily on the stable performance of the mass spectrometer over extended acquisition periods. Retention time drift, instrument contamination, or fluctuating ionization efficiency can each directly reduce quantification accuracy.

Key factors include:

(1) Contamination of the ion source or mass spectrometer chamber

(2) Retention time drift, especially in long-gradient liquid chromatography

(3) Improper configuration of resolution and scan speed settings

(4) Stochastic variability inherent in data-dependent acquisition (DDA)
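One visible consequence of stochastic precursor selection in DDA is missing values: a protein quantified in one run may be absent in the next. The sketch below is a minimal illustration of a per-protein completeness check. The function name, the toy intensities, and the 70% cutoff are assumptions chosen for illustration, not values from this article.

```python
def completeness(quant, min_fraction=0.7):
    """Return per-protein data completeness and the proteins that pass a cutoff.

    quant: protein -> list of intensities, one per run (None or 0 = not quantified).
    min_fraction: minimum fraction of runs with a value (assumed cutoff).
    """
    fractions = {}
    for protein, values in quant.items():
        if not values:
            continue  # no runs recorded for this protein
        n_quantified = sum(1 for v in values if v is not None and v > 0)
        fractions[protein] = n_quantified / len(values)
    kept = sorted(p for p, f in fractions.items() if f >= min_fraction)
    return fractions, kept


# Hypothetical intensities from four DDA runs.
quant = {
    "P1": [1.0e6, 1.2e6, 0.9e6, 1.1e6],
    "P2": [5.0e4, None, None, 4.0e4],
    "P3": [2.0e5, 2.1e5, None, 1.9e5],
}
fractions, kept = completeness(quant)
```

Proteins that fall below the cutoff are usually low-abundance species sampled inconsistently, so reporting completeness alongside quantification makes this source of variability explicit.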

3. Standardization of Data Analysis Workflow

Inconsistencies in data processing pipelines—such as variation in database search parameters, differences in algorithms for peak integration, and non-uniform normalization strategies—are major contributors to poor reproducibility in LFQ results.
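To make the point about normalization concrete, the following sketch applies one common choice, median centering of log2 intensities, identically to every run. It is an illustrative example only: the function name and toy data are hypothetical, and other normalization strategies may suit a given dataset better.

```python
import math
from statistics import median


def median_normalize(intensities):
    """Median-center log2 intensities so every run shares the same median.

    intensities: run name -> {protein: raw intensity}. Zero or missing values
    are treated as not quantified and skipped. Each run is assumed to contain
    at least one quantified protein.
    """
    log2_runs = {
        run: {p: math.log2(v) for p, v in prots.items() if v is not None and v > 0}
        for run, prots in intensities.items()
    }
    run_medians = {run: median(vals.values()) for run, vals in log2_runs.items()}
    global_median = median(run_medians.values())
    # Shift each run so that its median equals the median of the run medians.
    return {
        run: {p: v - run_medians[run] + global_median for p, v in vals.items()}
        for run, vals in log2_runs.items()
    }


# Hypothetical toy data: run_B was loaded at twice the amount of run_A.
raw = {
    "run_A": {"P1": 100.0, "P2": 400.0, "P3": 1600.0},
    "run_B": {"P1": 200.0, "P2": 800.0, "P3": 3200.0},
}
normalized = median_normalize(raw)
```

After normalization, the two runs in this toy example report the same log2 value for each protein, because their only difference was a global loading offset.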

Practical Strategies to Improve LFQ Data Quality and Reproducibility

1. Standardizing the Sample Preparation Workflow

(1) Use a consistent lysis buffer (such as RIPA or an SDS-based buffer) and uniform processing times, and keep samples on ice to limit proteolytic degradation.

(2) Ensure accurate protein quantification using the BCA assay, performed in a single batch to reduce technical variation.

(3) Maintain controlled enzymatic digestion conditions, including a standardized protease-to-substrate ratio (e.g., 1:50), temperature (e.g., 37°C), and duration (e.g., 16 hours).

(4) Minimize contamination risk by wearing gloves during all procedures and using low-protein-binding labware.
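The quantification and digestion steps above reduce to simple arithmetic that is easy to script, so that every sample receives the same protein input and the same enzyme amount. The sketch below assumes the 1:50 ratio is by mass (w/w) and that 50 µg of protein is digested per sample. Both are assumptions for illustration, as are the function and sample names.

```python
def digestion_plan(conc_ug_per_ul, target_protein_ug=50.0, enzyme_ratio=50.0):
    """Return the lysate volume and trypsin mass needed for each sample.

    conc_ug_per_ul: sample name -> protein concentration (ug/uL) from the BCA assay.
    target_protein_ug: protein mass digested per sample (assumed value).
    enzyme_ratio: substrate mass per unit enzyme mass, so 50 means 1:50 (w/w).
    """
    plan = {}
    for sample, conc in conc_ug_per_ul.items():
        if conc <= 0:
            raise ValueError(f"non-positive concentration for {sample}")
        plan[sample] = {
            "lysate_ul": round(target_protein_ug / conc, 2),
            "trypsin_ug": round(target_protein_ug / enzyme_ratio, 2),
        }
    return plan


# Hypothetical BCA readings for two samples.
plan = digestion_plan({"ctrl_1": 2.0, "treat_1": 2.5})
```

With these inputs, `ctrl_1` needs 25 µL of lysate and `treat_1` needs 20 µL, and both receive 1 µg of trypsin.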

At MtoZ Biolabs, we implement a standardized sample preparation SOP to minimize operator-induced variability and ensure high reproducibility and processing efficiency.

2. Optimizing Mass Spectrometry Maintenance and Acquisition Parameters

(1) Perform routine maintenance and calibration of the mass spectrometer, including regular cleaning of the ion source and timely replacement of gas filters.

(2) Employ a stable nanoLC system and incorporate column washing steps before and after gradient runs to prevent loss of column performance.

(3) Optimize data-dependent acquisition (DDA) settings by fine-tuning the number of selected precursor ions (Top N) together with the dynamic exclusion window, thereby avoiding excessive re-selection of high-abundance peptides.

(4) Use real-time system performance monitoring with spiked-in reference peptides, such as indexed Retention Time (iRT) standards.
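Monitoring with spiked-in reference peptides can be as simple as comparing their observed retention times in each run against a reference run. The following is a minimal sketch of that idea. The peptide names, retention times, and the 0.5-minute tolerance are hypothetical and would need to be set for the actual gradient and column.

```python
from statistics import median


def rt_drift_report(reference_rt, observed_rt, tolerance_min=0.5):
    """Compare observed retention times of spiked-in peptides to a reference run.

    reference_rt: peptide -> retention time (min) in the reference run.
    observed_rt: run name -> {peptide: retention time (min)}.
    Returns run -> (median shift in minutes, True if within tolerance).
    """
    report = {}
    for run, rts in observed_rt.items():
        shifts = [rts[p] - reference_rt[p] for p in reference_rt if p in rts]
        if not shifts:
            report[run] = (None, False)  # no reference peptides detected
            continue
        shift = median(shifts)
        report[run] = (round(shift, 3), abs(shift) <= tolerance_min)
    return report


# Hypothetical reference peptides and two acquisition runs.
reference = {"pep_a": 12.0, "pep_b": 35.0, "pep_c": 58.0}
runs = {
    "run_01": {"pep_a": 12.1, "pep_b": 35.1, "pep_c": 58.2},
    "run_02": {"pep_a": 13.0, "pep_b": 36.1, "pep_c": 59.3},
}
report = rt_drift_report(reference, runs)
```

Here `run_01` stays within tolerance while `run_02` is flagged, which would be a prompt to check the column and LC system before acquiring further samples.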

At MtoZ Biolabs, each sample is subjected to a pre-run system validation using a Thermo Orbitrap Exploris 480 platform, ensuring consistent and reliable mass spectrometry performance.

3. Standardizing Data Processing and Quality Control Procedures

(1) Apply uniform search parameters across all datasets, including fixed precursor mass tolerances, defined enzymatic cleavage rules, and consistent modification settings.

(2) Use established quantification algorithms, such as the MaxQuant LFQ module, and enable features like Match Between Runs (MBR), which transfers peptide identifications across runs and reduces missing values.

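(3) Conduct multi-level quality assessment, including Principal Component Analysis (PCA) to evaluate sample clustering consistency, Coefficient of Variation (CV) analysis to identify stable proteins, and cross-validation of quantification results against biological plausibility.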
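Of the quality checks used in LFQ, Coefficient of Variation (CV) analysis is the most direct to compute. The sketch below calculates the percent CV of each protein across replicate runs and lists the proteins under a cutoff. The 20% cutoff, the function names, and the toy intensities are assumptions for illustration rather than a recommended standard.

```python
from statistics import mean, stdev


def protein_cv(replicates):
    """Return the percent CV of each protein across replicate intensities.

    replicates: protein -> list of raw (non-log) intensities from replicate runs.
    Proteins with fewer than two positive values are skipped.
    """
    cvs = {}
    for protein, values in replicates.items():
        observed = [v for v in values if v is not None and v > 0]
        if len(observed) < 2:
            continue
        cvs[protein] = 100.0 * stdev(observed) / mean(observed)
    return cvs


def stable_proteins(cvs, max_cv=20.0):
    """Proteins whose CV is at or below the chosen cutoff (20% is an assumption)."""
    return sorted(p for p, cv in cvs.items() if cv <= max_cv)


# Hypothetical replicate intensities for three proteins.
data = {
    "P1": [100.0, 105.0, 95.0],
    "P2": [100.0, 200.0, 50.0],
    "P3": [80.0, None, 0.0],
}
cvs = protein_cv(data)
stable = stable_proteins(cvs)
```

CV is computed on raw intensities here because the standard deviation of log-transformed values is not a percent CV; P3 is excluded because only one replicate has a usable value.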

Proteomics data analysis at MtoZ Biolabs is conducted by experienced analysts, leveraging a proprietary multi-dimensional quality control framework to ensure scientific rigor and reproducibility.

As a powerful approach in systems biology, label-free quantitative proteomics plays a vital role in elucidating disease mechanisms and identifying potential biomarkers. By exercising stringent control over sample processing, instrument performance, and data analysis, researchers can significantly enhance the quality and reproducibility of LFQ data. MtoZ Biolabs is committed to delivering high-quality proteomics data through cutting-edge instrumentation and robust quality assurance systems, empowering researchers with dependable support for their scientific endeavors.

MtoZ Biolabs, an integrated chromatography and mass spectrometry (MS) services provider.

Related Services

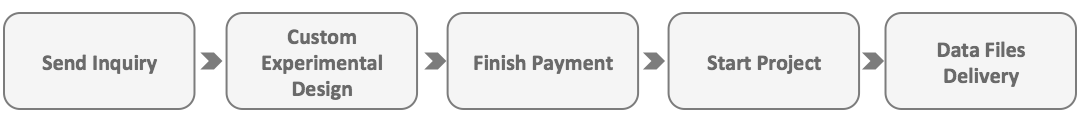

How to order?