Common Challenges in 4D DIA Data Processing and Practical Strategies for Resolution

With the increasing deployment of four-dimensional proteomics (4D proteomics) in biomarker discovery, drug development, and translational clinical studies, data-independent acquisition (DIA) has become the predominant quantitative acquisition strategy. 4D DIA adds ion mobility (IM) separation to the conventional dimensions of retention time, m/z, and intensity, which substantially enhances analytical sensitivity and proteome coverage. These gains, however, place greater demands on downstream data processing, interpretation, and quality control. The reliability of subsequent scientific conclusions depends directly on how well 4D DIA datasets are processed, curated, and validated.

Challenge 1: Excessive Background Noise and Interference Peaks Result in Poor Detection of Low-Abundance Proteins

1. Underlying Causes

Because DIA co-isolates and co-fragments all precursors within wide isolation windows, each MS/MS spectrum contains fragment ions from many peptides at once. This increases acquisition throughput but also introduces substantial background noise and interfering signals. In samples where target proteins are present at low abundance, such as serum (with its wide dynamic range) or exosome-derived proteomes, these interferences significantly reduce peptide identification and protein detection rates.

2. Mitigation Strategies

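(1) Optimization of Ion Mobility Separation Parameters: Tune trapped ion mobility spectrometry (TIMS) settings, such as ramp time and the 1/K0 range, to improve mobility resolution, so that IM acts as a fourth dimension for separating co-eluting precursors and filtering background signals.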

(2) Construction of High-Quality Spectral Libraries: Generate project-specific libraries from deep DDA acquisitions, and supplement them with in silico predicted spectra (e.g., from the deep-learning predictors in DIA-NN or Spectronaut) for peptides that lack empirical spectra.

(3) Post-Processing Noise Reduction: Apply stringent FDR control (1% or lower at the precursor and protein levels) and use machine-learning-based peak-group scoring to suppress interference-derived identifications.
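The FDR filtering in strategy (3) and the mobility-window filtering in strategy (1) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the scoring pipeline of DIA-NN or Spectronaut: the `Precursor` record, its discriminant `score`, the `(mz, one_over_k0, intensity)` peak layout, and the 0.05 1/K0 tolerance are all hypothetical. q-values follow the common target-decoy estimate (decoys divided by targets above a score threshold), made monotonic with a running minimum.

```python
from dataclasses import dataclass


@dataclass
class Precursor:
    # Hypothetical record: identifier, discriminant score (higher is better), decoy flag
    name: str
    score: float
    is_decoy: bool


def assign_q_values(precursors):
    """Return (precursor, q_value) pairs ranked by descending score.

    FDR at each rank is estimated as decoys / targets seen so far; the q-value
    is the lowest FDR at which the precursor would still be accepted.
    Score ties are not treated specially in this sketch.
    """
    ranked = sorted(precursors, key=lambda p: p.score, reverse=True)
    fdrs, targets, decoys = [], 0, 0
    for p in ranked:
        if p.is_decoy:
            decoys += 1
        else:
            targets += 1
        fdrs.append(decoys / max(targets, 1))
    # Running minimum from the bottom of the list makes q-values monotonic
    q_values = [0.0] * len(ranked)
    running_min = float("inf")
    for i in range(len(ranked) - 1, -1, -1):
        running_min = min(running_min, fdrs[i])
        q_values[i] = running_min
    return list(zip(ranked, q_values))


def filter_at_fdr(precursors, threshold=0.01):
    """Keep target precursors whose q-value is at or below the threshold (1% by default)."""
    return [p for p, q in assign_q_values(precursors) if not p.is_decoy and q <= threshold]


def within_mobility_window(peaks, expected_im, tolerance=0.05):
    """Keep peaks (mz, one_over_k0, intensity) whose 1/K0 lies within +/- tolerance
    of the precursor's expected mobility; anything outside is treated as interference."""
    return [pk for pk in peaks if abs(pk[1] - expected_im) <= tolerance]
```

In a ranked list where the first decoy appears at position six, `filter_at_fdr(pool, 0.01)` keeps only the five targets ranked above it; loosening the threshold admits targets further down the list.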

Challenge 2: Inconsistent Quantification Across Batches Hinders the Integration of Large-Scale Cohort Data

1. Underlying Causes

Large label-free DIA cohorts usually have to be acquired across multiple instrument batches. Retention time (RT) drift, ion mobility fluctuations, and variation in instrument performance between batches introduce quantitative inconsistencies. These batch effects are particularly problematic in clinical cohort analyses, where they can confound biological differences and distort downstream statistical inference.

2. Mitigation Strategies

(1) iRT-Based Temporal Alignment: Spike iRT reference peptides into the samples of every batch and fit a nonlinear regression that maps observed RTs onto the iRT scale, making RTs comparable across runs and batches.

(2) Ion Mobility Drift Correction: Use internal reference peptides or endogenous background ions to recalibrate ion mobility (1/K0) values across runs.

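(3) Batch Effect Modeling: Employ statistical correction methods (e.g., ComBat or RUV) to remove systematic inter-batch differences. Distributing biological groups evenly across batches keeps these corrections from removing true biological signal along with the batch effect.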

(4) Cloud-Based Processing and Version Harmonization: Process all batches in one environment with identical software versions and parameter settings to avoid discrepancies caused by software updates or configuration differences.
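The first three corrections above can be illustrated with a small standard-library sketch. It assumes that observed and library values for a set of reference peptides are already available, and all function names are hypothetical. Piecewise-linear interpolation stands in for the nonlinear regression (LOESS or splines are common alternatives), the IM correction applies a single median shift, and the batch step is a simplified location-only adjustment, not ComBat or RUV.

```python
import bisect
import statistics


def fit_rt_to_irt(observed_rt, library_irt):
    """Return a function mapping observed RT onto the iRT scale.

    Piecewise-linear interpolation between reference peptides; the end
    segments are extended linearly for extrapolation. Assumes at least two
    references with distinct observed RTs.
    """
    pairs = sorted(zip(observed_rt, library_irt))
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    if len(xs) < 2:
        raise ValueError("need at least two reference peptides")

    def to_irt(rt):
        # Index of the segment containing rt, clamped to the first/last segment
        i = min(max(bisect.bisect_right(xs, rt) - 1, 0), len(xs) - 2)
        x0, x1, y0, y1 = xs[i], xs[i + 1], ys[i], ys[i + 1]
        return y0 + (y1 - y0) * (rt - x0) / (x1 - x0)

    return to_irt


def correct_mobility(measured_im, ref_observed_im, ref_library_im):
    """Shift all 1/K0 values by the median offset seen on reference peptides."""
    offsets = [lib - obs for obs, lib in zip(ref_observed_im, ref_library_im)]
    shift = statistics.median(offsets)
    return [im + shift for im in measured_im]


def median_center_by_batch(log2_values, batch_labels):
    """Location-only batch adjustment for one protein across samples.

    Each value has its batch median subtracted and the global median added
    back. Simplified stand-in: ComBat additionally adjusts batch-specific
    variance using empirical Bayes shrinkage.
    """
    global_median = statistics.median(log2_values)
    batch_medians = {
        b: statistics.median([v for v, lbl in zip(log2_values, batch_labels) if lbl == b])
        for b in set(batch_labels)
    }
    return [v - batch_medians[b] + global_median for v, b in zip(log2_values, batch_labels)]
```

For example, with reference peptides observed at 10, 20, and 40 min and assigned iRT values of 0, 50, and 100, the fitted function maps an observed RT of 30 min to 75 on the iRT scale.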

Challenge 3: Large Data Footprint and Computational Demands Result in Slow Processing and Hardware Bottlenecks

1. Underlying Causes

Raw 4D DIA datasets frequently reach 20-50 GB per sample and contain millions of spectra. Stand-alone workstations are often insufficient due to constraints in memory, CPU/GPU resources, or I/O performance, resulting in extended computational turnaround times.

2. Mitigation Strategies

(1) Cloud Computing Acceleration: Offload raw data processing to high-performance computing clusters that process samples in parallel, using GPU acceleration where the software supports it.

(2) Lightweight Indexing and Spectral Prediction: Use compact, in silico predicted spectral libraries (e.g., as generated by DIA-NN) to reduce memory requirements and accelerate spectral matching.

(3) Workflow Automation: Implement automated pipelines for batch processing, including QC, FDR control, and normalization, thereby minimizing manual intervention and improving reproducibility.
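An automated, parallel pipeline of the kind described above might be organized as follows. This is a hypothetical sketch: the sample dictionary layout, the step functions, and the `MIN_PRECURSORS` QC cutoff are illustrative assumptions, and in practice each step would call the search engine or a dedicated QC and normalization tool. The essential design is a fixed, ordered list of steps applied identically to every sample.

```python
import statistics
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sample layout: {"name": str, "precursors": [(id, q_value, log2_intensity), ...]}
MIN_PRECURSORS = 3  # illustrative QC cutoff; real thresholds depend on sample type


def qc_step(sample):
    """Flag samples with too few precursors before further processing."""
    sample["qc_pass"] = len(sample["precursors"]) >= MIN_PRECURSORS
    return sample


def fdr_step(sample, threshold=0.01):
    """Keep precursors at or below the q-value threshold."""
    sample["precursors"] = [p for p in sample["precursors"] if p[1] <= threshold]
    return sample


def normalize_step(sample):
    """Median-center log2 intensities so samples share a common baseline."""
    intensities = [p[2] for p in sample["precursors"]]
    if intensities:
        med = statistics.median(intensities)
        sample["precursors"] = [(pid, q, x - med) for pid, q, x in sample["precursors"]]
    return sample


PIPELINE = (qc_step, fdr_step, normalize_step)


def run_pipeline(sample, steps=PIPELINE):
    """Apply every step in a fixed order, recording which steps ran."""
    sample = dict(sample)  # shallow copy; steps reassign fields rather than mutate them
    sample["log"] = []
    for step in steps:
        sample = step(sample)
        sample["log"].append(step.__name__)
    return sample


def run_cohort(samples, max_workers=4):
    """Process samples concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_pipeline, samples))
```

A thread pool is adequate when each step launches an external program; for CPU-bound Python work, `concurrent.futures.ProcessPoolExecutor` would be the usual substitute. On a cluster, the same per-sample function would more typically be dispatched as separate scheduler jobs.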

Challenge 4: Poor Reproducibility in Differential Analysis and Difficulty Achieving Statistical Significance

1. Underlying Causes

Peptide-level quantitative data in DIA are strongly influenced by the choice of peak detection algorithm, normalization strategy, and missing value imputation approach. Suboptimal handling of any of these steps yields unstable lists of differentially expressed proteins and reduces statistical robustness.

2. Mitigation Strategies

(1) Consistent Peak Detection Algorithms: Process the whole dataset with a single mature deep-learning-based platform (e.g., DIA-NN or Spectronaut) and fixed settings, avoiding the discrepancies that arise when results from different software are combined.

(2) Appropriate Normalization: Choose total ion current (TIC) normalization, median normalization, or internal reference protein scaling according to dataset characteristics; global methods such as TIC and median normalization assume that most proteins do not change between conditions.

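(3) Intelligent Missing Value Imputation: Avoid naive zero replacement and adopt probabilistic or KNN-based imputation, choosing the method according to whether values are missing at random or missing because the protein fell below the detection limit.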

(4) Rigorous Statistical Validation: Apply multiple-testing correction (e.g., Benjamini-Hochberg) to control the false discovery rate among reported differential proteins.
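Two of the steps above, KNN imputation and Benjamini-Hochberg adjustment, are sketched below in standard-library Python. The matrix layout (proteins as rows, samples as columns, `None` for missing values) and the default k are assumptions for illustration. KNN imputation presumes values are missing at random; for values missing because they fall below the detection limit, left-censored imputation methods are generally more appropriate.

```python
import math


def knn_impute(matrix, k=2):
    """Fill missing entries (None) in a proteins x samples matrix.

    For each missing cell, the k proteins closest to the target protein
    (root-mean-square difference over samples both have observed) that do
    have a value in that sample are averaged. The input is not modified.
    """
    imputed = [row[:] for row in matrix]
    for i, row in enumerate(matrix):
        for j, value in enumerate(row):
            if value is not None:
                continue
            candidates = []
            for r, other in enumerate(matrix):
                if r == i or other[j] is None:
                    continue
                shared = [(a, b) for a, b in zip(row, other) if a is not None and b is not None]
                if not shared:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in shared) / len(shared))
                candidates.append((dist, other[j]))
            candidates.sort(key=lambda c: c[0])
            neighbours = candidates[:k]
            if neighbours:  # leave the cell missing if no usable neighbour exists
                imputed[i][j] = sum(v for _, v in neighbours) / len(neighbours)
    return imputed


def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the original order (step-up procedure)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda idx: p_values[idx])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity with a running minimum
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

Proteins whose adjusted p-value falls below the chosen FDR level (commonly 0.05) would then be reported as differential.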

Challenge 5: Difficulties in Cross-Omics Integration with Transcriptomics and Metabolomics

1. Underlying Causes

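Proteomics differs from other omics layers in data structure, identifier systems, dynamic range, and normalization schemes, and features rarely map one-to-one (a single gene, for example, can give rise to several protein isoforms). Direct integration therefore results in dimensional mismatches and hampers cross-omics modeling.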

2. Mitigation Strategies

(1) Data Harmonization: Map all layers to a common identifier system (e.g., Ensembl gene IDs or UniProt accessions) and place intensities on a comparable scale, typically by log2 transformation followed by Z-scoring.

(2) Cross-Omics Dimensionality Reduction: Apply PCA or O-PLS to facilitate pattern extraction and cross-modality integration.

(3) Functional Pathway Mapping: Conduct pathway annotation through KEGG or Reactome using a unified analytical framework.

(4) Joint Statistical Modeling: Utilize machine learning methods such as Random Forest or Elastic Net to identify key multi-omics features.
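The harmonization step in (1) can be sketched as follows: proteins are mapped to gene identifiers, both layers are log2-transformed and Z-scored per feature, and only genes measured in both layers are kept. The placeholder identifiers and the `uniprot_to_ensembl` mapping dictionary are hypothetical, as is the +1 pseudocount used to avoid taking the logarithm of zero RNA counts. Real mappings are many-to-many and would need an explicit rule for isoforms. The resulting matched feature table is the kind of input the PCA, O-PLS, or Elastic Net steps above would consume.

```python
import math
import statistics


def log2_zscore(values):
    """log2-transform strictly positive values, then Z-score across samples.

    Requires at least two samples; constant features (zero SD) would need
    to be dropped beforehand.
    """
    logged = [math.log2(v) for v in values]
    mean = statistics.mean(logged)
    sd = statistics.stdev(logged)
    return [(x - mean) / sd for x in logged]


def integrate(protein_table, rna_table, uniprot_to_ensembl, pseudocount=1.0):
    """Join proteomic and transcriptomic features on a shared gene identifier.

    protein_table: {protein accession: [intensity per sample]}
    rna_table: {gene ID: [read count per sample]}, same sample order
    Returns {gene ID: (protein Z-scores, RNA Z-scores)} for genes present in both layers.
    """
    merged = {}
    for accession, intensities in protein_table.items():
        gene = uniprot_to_ensembl.get(accession)
        if gene is None or gene not in rna_table:
            continue  # unmapped protein or gene absent from the RNA layer
        rna = [c + pseudocount for c in rna_table[gene]]
        merged[gene] = (log2_zscore(intensities), log2_zscore(rna))
    return merged
```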

Although 4D DIA data processing is intrinsically complex, improvements in spectral library construction, batch correction, cloud acceleration, and standardized analytical workflows can substantially enhance data quality and research efficiency. For large cohorts and low-abundance biomarker studies, mature analytical platforms significantly reduce experimental and statistical risks. Using the Bruker timsTOF Pro and a DIA-NN-based cloud platform, combined with proprietary batch correction and multi-omics integration pipelines, MtoZ Biolabs has delivered high-quality datasets for hundreds of projects related to cancer, immune, and metabolic diseases, supporting researchers throughout the entire process from raw data to publication-ready results.

MtoZ Biolabs is an integrated chromatography and mass spectrometry (MS) services provider.

Related Services

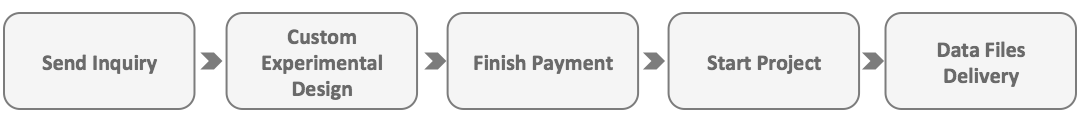

How to order?