How Can Effective Regression Be Achieved When Principal Component Analysis Yields Excessive Independent Variables?

When principal component analysis (PCA) yields a large number of components, using all of them as independent variables can make the regression prone to overfitting and harder to interpret, particularly when the sample size is modest. The following strategies can be considered to address this issue:

Feature Selection

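After PCA, it is advisable to retain only the most informative components rather than all of them. A common rule is to keep the leading components whose cumulative explained variance reaches a chosen threshold (for example, 90 to 95 percent), or to inspect a scree plot for the point where additional components contribute little. If the model is built on the original features instead, techniques such as variance thresholding, correlation-based filtering, and recursive feature elimination can identify a representative subset. Either route reduces the number of independent variables in the regression model.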
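As a minimal sketch of choosing components by cumulative explained variance, the example below uses scikit-learn. The synthetic data, the 0.95 threshold, and all variable names are assumptions made for illustration, not values from this article.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic data (assumption): 200 samples, 30 correlated features
# generated from 5 latent factors plus a little noise.
latent = rng.normal(size=(200, 5))
X = latent @ rng.normal(size=(5, 30)) + 0.1 * rng.normal(size=(200, 30))

X_std = StandardScaler().fit_transform(X)

# A float in (0, 1) asks PCA to keep the fewest components whose
# cumulative explained variance reaches that fraction.
pca = PCA(n_components=0.95, svd_solver="full")
scores = pca.fit_transform(X_std)

n_kept = pca.n_components_
cum_var = np.cumsum(pca.explained_variance_ratio_)
print(f"kept {n_kept} of {X.shape[1]} components, "
      f"cumulative variance {cum_var[-1]:.3f}")
```

The `scores` matrix can then serve as the predictor matrix for the regression. The threshold is a judgment call and is often tuned by cross-validation on the downstream model.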

Ridge Regression or LASSO Regression

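These regularized regression approaches penalize the magnitude of the regression coefficients, which stabilizes the estimates when predictors are numerous or collinear. Ridge regression (L2 penalty) shrinks coefficients toward zero but does not set them exactly to zero, so it does not select variables. LASSO (L1 penalty) can shrink some coefficients exactly to zero and therefore performs automatic predictor selection. Both are suitable for high-dimensional regression problems.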
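The difference between the two penalties can be sketched with scikit-learn as follows. The synthetic data and the fixed `alpha` values are illustrative assumptions. In practice the penalty strength is usually chosen by cross-validation, for example with `RidgeCV` or `LassoCV`.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)

# Synthetic data (assumption): 40 predictors, only the first 3 matter.
X = rng.normal(size=(150, 40))
true_coef = np.zeros(40)
true_coef[:3] = [3.0, -2.0, 1.5]
y = X @ true_coef + 0.5 * rng.normal(size=150)

# Penalized regression is scale-sensitive, so standardize first.
X_std = StandardScaler().fit_transform(X)

ridge = Ridge(alpha=1.0).fit(X_std, y)   # L2 penalty: shrinks only
lasso = Lasso(alpha=0.1).fit(X_std, y)   # L1 penalty: shrinks and zeroes

n_zero_ridge = int(np.sum(ridge.coef_ == 0.0))
n_zero_lasso = int(np.sum(lasso.coef_ == 0.0))
print("coefficients set exactly to zero:",
      {"ridge": n_zero_ridge, "lasso": n_zero_lasso})
```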

Principal Component Regression (PCR) and Partial Least Squares Regression (PLS)

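PCR and PLS both regress on a small number of derived components. PCR regresses the response on the leading orthogonal principal components, which are chosen without reference to the response, so a high-variance component is not necessarily a predictive one. PLS constructs its components from both the predictors and the response, seeking directions with high covariance with the response, and therefore often needs fewer components to reach comparable predictive accuracy.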

Alternative Dimensionality Reduction Methods

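Beyond PCA, other techniques such as Independent Component Analysis (ICA) and Linear Discriminant Analysis (LDA) can be applied to reduce data dimensionality. ICA seeks statistically independent rather than merely uncorrelated components. LDA is supervised and requires class labels, so it applies when the target is categorical or has been grouped into classes, not directly to a continuous response. Whether these methods offer an advantage depends on the nature of the dataset and the specific objectives of the regression task.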

Stratified Sampling

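For large datasets, stratified sampling can partition the observations into smaller, manageable subsets. Regression models can then be fitted to each subset individually, and their outputs subsequently aggregated to construct a global model. Note that this reduces the number of observations per fit, not the number of predictors, so it eases the computational load but should be combined with one of the approaches above when the number of variables is the main problem.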

Data Preprocessing Techniques

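PCA is sensitive to variable scale: variables with large variances dominate the leading components regardless of their relevance. Applying preprocessing steps such as standardization (zero mean, unit variance) or normalization before PCA puts the variables on a comparable scale. This makes the dimensionality reduction more meaningful and typically improves the subsequent performance of regression analysis.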
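The effect of scale on PCA can be illustrated with a small sketch. The data are synthetic and the factor of 1000 is an arbitrary assumption standing in for a variable recorded in much larger units.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(3)

# Synthetic data (assumption): four independent variables, one of
# which is expressed in units 1000 times larger than the others.
X = rng.normal(size=(300, 4))
X[:, 0] *= 1000.0

# Share of total variance captured by the first component.
raw_ratio = PCA(n_components=1).fit(X).explained_variance_ratio_[0]

X_std = StandardScaler().fit_transform(X)
std_ratio = PCA(n_components=1).fit(X_std).explained_variance_ratio_[0]

print(f"first-component share  raw: {raw_ratio:.4f}  standardized: {std_ratio:.4f}")
```

Without scaling, the first component is essentially the large-unit variable alone. After standardization, no single variable dominates.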

By applying the strategies above, the challenges posed by a large number of predictors following PCA can be mitigated, enabling more robust and interpretable regression modeling. The choice of method should be guided by the characteristics of the dataset and the specific goals of the research.

MtoZ Biolabs, an integrated chromatography and mass spectrometry (MS) services provider.

Related Services

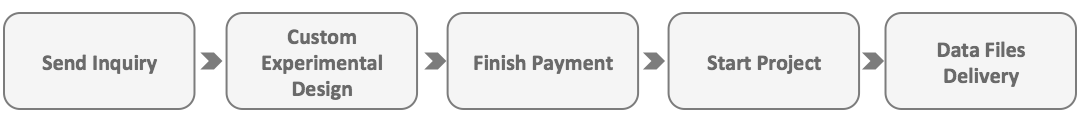

How to order?