Error Analysis in Proteomics Data Assessment

As proteomics technologies continue to advance, acquiring proteomics data has become more efficient and accessible. However, the inherent complexity of proteomics data and the limitations of current technologies mean that errors can arise during data evaluation, affecting the accuracy and reliability of experimental results.

Sources of Error in Proteomics Data Evaluation

1. Sample Preparation

Sample preparation is a critical foundational step in proteomics research because it directly affects the quality and reliability of the final data. The complexity of biological samples and of the methods used to process them can introduce significant variability. For instance, during cell lysis, protein fractionation, or enrichment, some proteins may degrade or be lost, yielding incomplete or biased data. Contaminants from the laboratory environment or instruments (keratins from skin and dust are a common example) can also be introduced, producing spurious protein identifications that complicate subsequent analyses.
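
A common safeguard is to search a list of known contaminant sequences alongside the target database and flag any hits before interpretation. The sketch below assumes identifications have already been reduced to simple protein identifiers; `KNOWN_CONTAMINANTS` and `split_contaminants` are hypothetical names, and the identifiers in the set are illustrative placeholders rather than a curated contaminant list.

```python
from typing import FrozenSet, Iterable, List, Tuple

# Hypothetical, illustrative contaminant identifiers (NOT a curated list).
# In practice a maintained contaminant database would be used instead.
KNOWN_CONTAMINANTS: FrozenSet[str] = frozenset({"KRT1", "KRT10", "TRYPSIN", "BSA"})


def split_contaminants(
    protein_ids: Iterable[str],
    contaminants: FrozenSet[str] = KNOWN_CONTAMINANTS,
) -> Tuple[List[str], List[str]]:
    """Separate identified proteins into (kept, flagged) lists, preserving input order."""
    kept: List[str] = []
    flagged: List[str] = []
    for pid in protein_ids:
        # Matches are flagged rather than silently dropped so they can still be reviewed.
        if pid in contaminants:
            flagged.append(pid)
        else:
            kept.append(pid)
    return kept, flagged


kept, flagged = split_contaminants(["ACTB", "KRT1", "GAPDH", "TRYPSIN"])
print(kept)     # ['ACTB', 'GAPDH']
print(flagged)  # ['KRT1', 'TRYPSIN']
```

Flagging rather than deleting keeps an audit trail, which matters because some common "contaminant" proteins, such as keratins, can be genuine components of certain samples.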

2. Protein Identification and Quantification

Mass spectrometry (MS) is the most widely used technique for protein identification and quantification in proteomics, but it is not immune to errors. Background noise, overlapping signals, and mass inaccuracies can all lead to misidentified proteins. Moreover, protein abundances in biological samples span a wide dynamic range, so low-abundance proteins often fall below the instrument's detection limit and are under-represented or missing in quantification results. These limitations can skew the overall picture of the proteome.
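
Mass accuracy is usually expressed in parts per million (ppm), and search tools typically consider only candidates whose theoretical mass falls inside a chosen tolerance of the measured value. The sketch below, using hypothetical peptide names and made-up m/z values, shows how two candidates can both fall inside a 10 ppm window (an assumed, instrument-dependent setting), leaving the assignment ambiguous.

```python
from typing import Dict, List


def ppm_error(observed_mz: float, theoretical_mz: float) -> float:
    """Signed mass error in parts per million (ppm)."""
    return (observed_mz - theoretical_mz) / theoretical_mz * 1e6


def matching_candidates(
    observed_mz: float, candidates: Dict[str, float], tol_ppm: float = 10.0
) -> List[str]:
    """Names of candidates whose theoretical m/z lies within +/- tol_ppm of the observed value."""
    return sorted(
        name
        for name, theoretical in candidates.items()
        if abs(ppm_error(observed_mz, theoretical)) <= tol_ppm
    )


# Hypothetical candidate peptides with made-up theoretical m/z values.
candidates = {"pep_A": 500.0000, "pep_B": 500.0030, "pep_C": 500.0200}
observed = 500.0015

print(round(ppm_error(observed, candidates["pep_A"]), 2))  # 3.0
print(matching_candidates(observed, candidates))           # ['pep_A', 'pep_B']
```

If the true ion were `pep_B` but `pep_A` scored higher on other evidence, the result would be a misidentification of the kind described above; tightening the tolerance below the instrument's real accuracy would instead discard the correct match.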

3. Data Processing and Analysis

Data processing in proteomics adds another layer of potential error. Algorithms and software tools interpret mass spectrometry data by matching the measured spectra against protein sequence databases, and inaccuracies at this stage can introduce systematic errors. For instance, searching an inappropriate database (such as one for the wrong organism) or using suboptimal search parameters can produce false-positive identifications or cause critical proteins to be missed. Furthermore, different software platforms may yield different results for the same dataset because their underlying algorithms differ, adding further uncertainty to the analysis.
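
The text does not name a specific control, but one widely used way to keep false positives in check is the target-decoy strategy: spectra are also searched against reversed or shuffled "decoy" sequences, and the number of decoy hits above a score threshold estimates the number of false target hits. The sketch below is a simplified version under that assumption; `PSM`, `estimated_fdr`, and `score_cutoff` are hypothetical names, the scores are made up, and real tools add refinements (such as q-values) that are omitted here.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class PSM:
    """A peptide-spectrum match: its search score and whether it hit a decoy sequence."""
    score: float
    is_decoy: bool


def estimated_fdr(psms: Sequence[PSM], threshold: float) -> float:
    """Decoy/target ratio among PSMs scoring at or above the threshold."""
    targets = sum(1 for p in psms if p.score >= threshold and not p.is_decoy)
    decoys = sum(1 for p in psms if p.score >= threshold and p.is_decoy)
    # With no targets above the threshold the estimate is undefined; treat it as unacceptable.
    return decoys / targets if targets else float("inf")


def score_cutoff(psms: Sequence[PSM], max_fdr: float = 0.01) -> Optional[float]:
    """Lowest observed score whose estimated FDR does not exceed max_fdr, or None."""
    for threshold in sorted({p.score for p in psms}):
        if estimated_fdr(psms, threshold) <= max_fdr:
            return threshold
    return None


# Made-up scores: five target hits and two decoy hits.
psms = [PSM(s, False) for s in (10.0, 9.0, 8.0, 7.0, 6.0)] + [PSM(5.0, True), PSM(3.0, True)]
print(score_cutoff(psms))                # 6.0
print(score_cutoff(psms, max_fdr=0.25))  # 5.0
```

Relaxing the tolerated FDR lowers the cutoff and admits more identifications, at the cost of more expected false positives, which is one concrete way that search settings change what ends up being reported.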

4. Statistical Analysis and Result Interpretation

Statistical analysis plays a central role in evaluating the significance of proteomics findings, and the choice of statistical method can strongly influence the final results. An incorrect statistical model or an inappropriate significance threshold can produce false positives or false negatives; for example, testing thousands of proteins at an uncorrected p < 0.05 is expected to yield many false positives by chance alone. As a result, researchers analyzing identical datasets may reach different conclusions depending on their choice of statistical tools. Human interpretation of the results, especially when predicting protein functions or drawing biological inferences, can also introduce subjective biases that further complicate evaluation.
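
One standard way to set significance thresholds when many proteins are tested at once is the Benjamini-Hochberg procedure, which controls the false discovery rate rather than the per-test error rate. The plain-Python sketch below uses made-up p-values; in practice an established implementation, such as `statsmodels.stats.multitest.multipletests` with `method="fdr_bh"`, would normally be preferred.

```python
from typing import List, Sequence


def benjamini_hochberg(pvalues: Sequence[float]) -> List[float]:
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p-value
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so each adjusted value is the minimum of
    # p * m / rank over all ranks at or above it, which keeps the result monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Made-up p-values for four proteins.
raw = [0.01, 0.04, 0.03, 0.20]
adj = benjamini_hochberg(raw)
print(sum(p < 0.05 for p in raw))  # 3 proteins pass an uncorrected 0.05 cutoff
print(sum(q < 0.05 for q in adj))  # 1 protein passes after BH adjustment
```

The same data and the same nominal 0.05 threshold give three "hits" or one depending on whether the correction is applied, a small-scale version of the divergent conclusions described above.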

Importance and Challenges of Error Analysis in Proteomics

1. Importance

Error analysis is essential for improving the accuracy and reliability of proteomics data. By identifying where errors arise, researchers can refine experimental protocols, apply stricter controls during sample preparation, and improve data processing methods. For instance, standardized workflows can reduce contamination and protein loss, producing more robust datasets. In mass spectrometry, gains in instrument sensitivity and resolution improve the detection of low-abundance proteins, making proteomic analyses more comprehensive. Improved statistical approaches can also reduce false positives and false negatives, increasing confidence in the biological relevance of the findings.

2. Challenges

Error analysis also faces several challenges. Because errors in proteomics arise from many sources, they are difficult to eliminate entirely. Biological heterogeneity, for instance, can produce inconsistent results even under tightly controlled experimental conditions. Current computational and analytical tools cannot yet capture every potential error with high precision, and some errors, particularly those arising from complex biological systems, may remain undetected or be too subtle to account for fully. Finally, error analysis can be resource-intensive, demanding substantial time and computing power in large-scale proteomics projects, which can be a major hurdle for researchers with limited resources.
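
Variability across replicates is often summarized, at least roughly, by the coefficient of variation (CV) of each protein's abundance. The sketch below computes a percent CV and flags proteins above a cutoff; the function names, the 20% cutoff, and the intensities are hypothetical, and a high CV alone cannot tell biological heterogeneity apart from technical error.

```python
from statistics import mean, stdev
from typing import Dict, List, Sequence


def percent_cv(values: Sequence[float]) -> float:
    """Coefficient of variation (sample standard deviation / mean) as a percentage."""
    if len(values) < 2:
        raise ValueError("need at least two replicate values")
    m = mean(values)
    if m == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return stdev(values) / m * 100


def high_variability(replicates: Dict[str, Sequence[float]], max_cv: float = 20.0) -> List[str]:
    """Proteins whose replicate CV exceeds max_cv percent (the default cutoff is an assumption)."""
    return sorted(pid for pid, vals in replicates.items() if percent_cv(vals) > max_cv)


# Made-up intensities for two proteins across three biological replicates.
replicates = {"protein_1": [100.0, 110.0, 90.0], "protein_2": [50.0, 80.0, 20.0]}
print(round(percent_cv(replicates["protein_1"]), 1))  # 10.0
print(high_variability(replicates))                   # ['protein_2']
```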

By understanding the sources of errors, researchers can continually refine experimental designs and data analysis techniques, thus improving the overall quality of proteomics research. Through ongoing improvements, proteomics will continue to play a transformative role in advancing our understanding of biological systems.

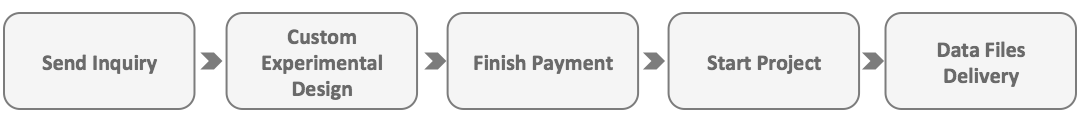

How to order?