Bottom-Up Proteomics Data Analysis Pipeline

Acquisition strategies:

- DDA (Data-Dependent Acquisition): Selects the top N precursor ions based on signal intensity for fragmentation.
- DIA (Data-Independent Acquisition): Systematically fragments all ions across predefined m/z ranges, allowing for comprehensive and reproducible quantification in large-scale studies.

Quantification strategies:

- Label-Free: No labeling required; suitable for large cohorts.
- Isobaric Tagging (TMT/iTRAQ): Enables multiplexed quantification across samples.
- DIA-Based Quantification: Leverages spectral libraries for improved accuracy and depth.

Quality control procedures:

- Missing Value Handling: Use imputation methods (e.g., KNN, random forest) or apply filtering strategies.
- Inter-Sample Consistency Checks: Compute Pearson correlation coefficients to identify outlier samples.
- PCA (Principal Component Analysis): Assess batch effects and sample clustering trends.

Bottom-up proteomics involves the enzymatic digestion of proteins into peptides, followed by identification and quantification through mass spectrometry, ultimately enabling the inference of protein identities and abundances. Owing to its high throughput, sensitivity, and adaptability, this approach is widely employed in biomarker discovery, disease mechanism elucidation, and drug development. Below is a standardized bottom-up proteomics data analysis pipeline.

Sample Preparation and Protein Digestion

The initial step in bottom-up proteomics involves protein extraction and enzymatic digestion. Depending on the sample type (tissue, cells, or biofluids), suitable lysis methods and buffers should be employed to ensure efficient extraction while preserving native post-translational modifications (PTMs). Protein concentrations are then quantified using BCA or Bradford assays, and quality is preliminarily evaluated via SDS-PAGE. Trypsin, which cleaves on the C-terminal side of lysine and arginine residues, is typically used to generate peptides compatible with mass spectrometry. If needed, additional enzymes such as Lys-C or Glu-C may be applied to enhance sequence coverage.

1. Protein Extraction

Appropriate lysis buffers and methods (e.g., sonication, heating, or mechanical disruption) should be selected based on tissue or cell type to maximize extraction efficiency and maintain protein integrity.

2. Protein Quantification and Quality Control

Protein concentrations are measured using BCA or Bradford assays, and SDS-PAGE is used for preliminary assessment of protein quality.

3. Proteolytic Digestion (Typically Using Trypsin)

Proteins are enzymatically cleaved into peptides, generating complex peptide mixtures amenable to downstream mass spectrometry analysis.
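The cleavage step can be illustrated with a minimal in silico digestion sketch. It assumes the commonly used simplified trypsin rule (cleave after K or R unless the next residue is P); the function name `tryptic_digest` and its parameters are hypothetical and not taken from any specific tool.

```python
def tryptic_digest(sequence, missed_cleavages=0, min_length=1):
    """Return peptides from a simplified in silico tryptic digest.

    Assumption: cleave C-terminal to K or R, except when followed by P.
    """
    # Step 1: split the sequence into fully cleaved fragments.
    fragments, start = [], 0
    for i, residue in enumerate(sequence):
        next_residue = sequence[i + 1] if i + 1 < len(sequence) else ""
        if residue in "KR" and next_residue != "P":
            fragments.append(sequence[start:i + 1])
            start = i + 1
    if start < len(sequence):
        fragments.append(sequence[start:])  # C-terminal remainder

    # Step 2: join neighbouring fragments to model missed cleavages.
    peptides = []
    for i in range(len(fragments)):
        last = min(i + missed_cleavages, len(fragments) - 1)
        for j in range(i, last + 1):
            peptide = "".join(fragments[i:j + 1])
            if len(peptide) >= min_length:
                peptides.append(peptide)
    return peptides


print(tryptic_digest("MKRPGAKLR"))
print(tryptic_digest("MKRPGAKLR", missed_cleavages=1))
```

Search software typically applies a comparable rule when building its theoretical peptide list, usually with additional length and mass filters that are omitted here.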

LC-MS/MS Analysis

Peptides are first separated by high-performance liquid chromatography (HPLC), then subjected to MS1 and MS2 scanning within the mass spectrometer. Widely used high-resolution instruments, such as the Orbitrap Exploris (Thermo), timsTOF Pro (Bruker), and TripleTOF (Sciex), enable rapid and accurate acquisition suitable for diverse research scenarios. Two primary acquisition strategies are commonly employed: data-dependent acquisition (DDA) and data-independent acquisition (DIA).

MtoZ Biolabs utilizes cutting-edge instruments such as the Orbitrap Exploris and timsTOF, along with high-precision nanoLC systems, to deliver high coverage and reproducibility for in-depth analysis of complex biological samples.
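As a rough illustration of how DDA picks precursors, the sketch below selects the N most intense MS1 peaks for fragmentation. The exclusion list is an added assumption that loosely mimics the dynamic exclusion used on many instruments; all names and the tolerance value are hypothetical, and real instrument logic also considers charge state, isolation windows, and timing.

```python
def select_top_n_precursors(ms1_peaks, n=10, excluded_mz=(), tolerance=0.01):
    """Pick the n most intense MS1 peaks that are not on the exclusion list.

    ms1_peaks: list of (mz, intensity) tuples from one MS1 scan.
    excluded_mz: m/z values fragmented recently (assumed dynamic exclusion).
    """
    candidates = [
        (mz, intensity)
        for mz, intensity in ms1_peaks
        if not any(abs(mz - ex) <= tolerance for ex in excluded_mz)
    ]
    # Rank by intensity, highest first, and keep the top n.
    candidates.sort(key=lambda peak: peak[1], reverse=True)
    return candidates[:n]


scan = [(400.2, 1e5), (512.3, 5e6), (623.8, 2e6), (701.4, 3e4)]
print(select_top_n_precursors(scan, n=2))
print(select_top_n_precursors(scan, n=2, excluded_mz=[512.3]))
```

Because selection depends on intensity in each scan, low-abundance precursors can be missed from run to run, which is one reason DIA is described as more reproducible for large-scale studies.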

Raw Data Processing

1. Data Format Conversion

Mass spectrometers from different vendors output distinct file formats, e.g., .raw (Thermo) or .wiff (Sciex). These files are typically converted, using either open-source or commercial software, to open formats such as .mzML or .mgf for downstream processing; some search tools can also read vendor formats directly.
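Reading vendor files requires dedicated converters (ProteoWizard's msConvert is a widely used open-source option), so the sketch below only illustrates the target side: how a single MS/MS spectrum that is already in memory might be written as an MGF text block. The function name and the choice of optional fields are assumptions for illustration.

```python
def spectrum_to_mgf(title, precursor_mz, charge, peaks, rt_seconds=None):
    """Format one MS/MS spectrum as an MGF entry.

    peaks: iterable of (mz, intensity) tuples for the fragment ions.
    """
    lines = [
        "BEGIN IONS",
        f"TITLE={title}",
        f"PEPMASS={precursor_mz:.4f}",
        f"CHARGE={charge}+",
    ]
    if rt_seconds is not None:
        lines.append(f"RTINSECONDS={rt_seconds:.1f}")
    # Fragment peaks are written one per line, sorted by m/z.
    lines += [f"{mz:.4f} {intensity:.1f}" for mz, intensity in sorted(peaks)]
    lines.append("END IONS")
    return "\n".join(lines) + "\n"


entry = spectrum_to_mgf("scan1", 500.25, 2, [(200.1, 35.0), (100.0, 10.0)], rt_seconds=61.2)
print(entry)
```

MGF stores only peak lists, whereas mzML also retains instrument metadata and MS1 scans, so the choice of format depends on what the downstream software needs.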

2. Peptide Identification

Following conversion, database search software (e.g., MaxQuant, MSFragger, Proteome Discoverer) is used to match MS/MS spectra against reference protein databases to identify peptide sequences and their modifications. Identification confidence is controlled by a false discovery rate (FDR) threshold, typically 1%, which is commonly estimated with a target-decoy strategy.
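The FDR filter can be sketched with a simple target-decoy estimate. This is a minimal illustration under stated assumptions: each peptide-spectrum match (PSM) carries a score where higher is better and a flag marking decoy hits, and the FDR at a given score is estimated as decoys divided by targets. The function name is hypothetical, and real tools use more refined estimators.

```python
def filter_psms_at_fdr(psms, fdr_threshold=0.01):
    """Return target PSMs whose q-value is at or below the threshold.

    psms: list of (score, is_decoy) tuples; higher score means a better match.
    """
    ranked = sorted(psms, key=lambda psm: psm[0], reverse=True)

    # Running FDR estimate at each rank: decoys seen / targets seen.
    fdrs, targets, decoys = [], 0, 0
    for _, is_decoy in ranked:
        if is_decoy:
            decoys += 1
        else:
            targets += 1
        fdrs.append(decoys / max(targets, 1))

    # q-value: the lowest FDR at which this PSM would still be accepted.
    qvalues = fdrs[:]
    for i in range(len(qvalues) - 2, -1, -1):
        qvalues[i] = min(qvalues[i], qvalues[i + 1])

    return [psm for psm, q in zip(ranked, qvalues)
            if not psm[1] and q <= fdr_threshold]


psms = [(10, False), (9, False), (8, False), (7, True), (6, False), (5, True)]
print(filter_psms_at_fdr(psms, fdr_threshold=0.01))
```

With so few PSMs the estimate is coarse; in practice the same logic is applied to thousands of matches, and often again at the peptide and protein levels.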

3. Protein Inference and Quantification

Multiple peptides originating from the same protein are integrated to infer the presence and relative abundance of proteins. Quantification strategies include label-free quantification, isobaric tagging (TMT/iTRAQ), and DIA-based quantification.
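One simple way to integrate peptide measurements into a protein-level value is to sum peptide intensities per protein, as sketched below. Summation is chosen here only for illustration; established tools use more elaborate algorithms, and this sketch ignores peptides shared between proteins. All names are hypothetical.

```python
from collections import defaultdict


def rollup_protein_intensity(peptide_rows, min_peptides=1):
    """Sum peptide intensities per protein.

    peptide_rows: iterable of (protein_id, peptide_sequence, intensity);
    intensity may be None or non-positive for missing measurements.
    min_peptides: minimum number of distinct quantified peptides required.
    """
    sums = defaultdict(float)
    peptides = defaultdict(set)
    for protein, peptide, intensity in peptide_rows:
        if intensity is None or intensity <= 0:
            continue  # skip missing values rather than treating them as zero
        sums[protein] += intensity
        peptides[protein].add(peptide)
    return {p: sums[p] for p in sums if len(peptides[p]) >= min_peptides}


rows = [
    ("P1", "AAK", 100.0),
    ("P1", "CCR", 50.0),
    ("P2", "DDK", 20.0),
    ("P2", "DDK", None),
]
print(rollup_protein_intensity(rows))
print(rollup_protein_intensity(rows, min_peptides=2))
```

Requiring at least two distinct peptides per protein is a common, conservative filter, at the cost of dropping proteins supported by a single peptide.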

Data Quality Control and Normalization

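To ensure analytical accuracy and reproducibility, comprehensive quality control procedures must be applied, including missing value handling, inter-sample consistency checks, and principal component analysis (PCA).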
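Two of these checks can be sketched with the standard library alone: log2 transformation with median centering as one simple normalization, and Pearson correlation between samples to flag outliers. The median-centering choice, the 0.8 cutoff, and all function names are assumptions for illustration, not recommendations from the source.

```python
import math
from statistics import median


def log2_median_normalize(intensities):
    """Log2-transform one sample and subtract its median (None or 0 = missing)."""
    logged = [math.log2(v) if v else None for v in intensities]
    center = median(v for v in logged if v is not None)
    return [v - center if v is not None else None for v in logged]


def pearson(x, y):
    """Pearson correlation over positions where both samples have a value."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    var_x = sum((a - mean_x) ** 2 for a, _ in pairs)
    var_y = sum((b - mean_y) ** 2 for _, b in pairs)
    return cov / math.sqrt(var_x * var_y)


def flag_outlier_samples(samples, min_median_r=0.8):
    """Flag samples whose median correlation with all others is below the cutoff."""
    flagged = []
    for name, values in samples.items():
        others = [pearson(values, v) for other, v in samples.items() if other != name]
        if median(others) < min_median_r:
            flagged.append(name)
    return flagged


samples = {
    "A": [1.0, 2.0, 3.0, 4.0],
    "B": [2.0, 4.0, 6.0, 8.0],
    "C": [4.0, 3.0, 2.0, 1.0],   # deliberately anti-correlated
    "D": [1.1, 2.0, 3.2, 3.9],
}
print(log2_median_normalize([4, 8, None, 16]))
print(flag_outlier_samples(samples))
```

The median of pairwise correlations is used instead of the mean so that a single poor sample does not drag down the score of every other sample.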

Differential Expression Analysis

After normalization, differential expression analysis can be conducted using statistical methods such as t-tests, ANOVA, or linear model-based approaches (e.g., limma) to identify proteins with significant abundance changes between conditions. Common selection criteria include |log₂ fold change| > 1 and p-value < 0.05, with the p-value preferably adjusted for multiple testing (e.g., by the Benjamini-Hochberg procedure) because thousands of proteins are tested at once. Visualization tools such as volcano plots, heatmaps, and boxplots are employed to illustrate expression patterns and group-specific features.
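The selection criteria can be expressed as a short filter. The sketch below assumes that log₂ fold changes and raw p-values have already been computed by one of the statistical methods mentioned, and it applies Benjamini-Hochberg adjustment before thresholding; that adjustment is an assumption added here, and the function names are hypothetical.

```python
def benjamini_hochberg(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


def select_differential(results, lfc_cutoff=1.0, alpha=0.05):
    """Keep proteins with |log2FC| > lfc_cutoff and adjusted p-value < alpha.

    results: list of (protein_id, log2_fold_change, raw_pvalue).
    """
    adjusted = benjamini_hochberg([r[2] for r in results])
    return [
        (protein, lfc, q)
        for (protein, lfc, _), q in zip(results, adjusted)
        if abs(lfc) > lfc_cutoff and q < alpha
    ]


results = [("P1", 2.0, 0.005), ("P2", 0.5, 0.01), ("P3", -1.5, 0.03), ("P4", 1.2, 0.04)]
for protein, lfc, q in select_differential(results):
    print(protein, lfc, round(q, 3))
```

The same two values per protein (log₂ fold change and adjusted p-value) are what a volcano plot displays on its x and y axes.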

Functional Enrichment and Pathway Analysis

Identified differentially expressed proteins require further biological interpretation. Functional annotation and enrichment analyses using databases such as Gene Ontology (GO), KEGG, and Reactome reveal involved biological processes, molecular functions, and signaling pathways. Protein–protein interaction (PPI) networks can be constructed using the STRING database to identify key regulators (hub proteins), which can then be visualized and analyzed using Cytoscape.
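Enrichment analysis is commonly framed as an over-representation test: given a background of quantified proteins, is a GO term or pathway annotated to more of the differential proteins than expected by chance? The sketch below computes a one-sided hypergeometric p-value for a single term. It is a minimal illustration with hypothetical names; real analyses test many terms and therefore also need multiple-testing correction.

```python
from math import comb


def enrichment_pvalue(background_size, annotated_in_background,
                      selected_size, annotated_in_selected):
    """One-sided hypergeometric p-value for over-representation of one term.

    background_size: number of proteins quantified (N).
    annotated_in_background: background proteins carrying the term (K).
    selected_size: number of differential proteins (n).
    annotated_in_selected: differential proteins carrying the term (k).
    Returns P(X >= k) when n proteins are drawn at random from N.
    """
    N, K = background_size, annotated_in_background
    n, k = selected_size, annotated_in_selected
    total = comb(N, n)
    upper = min(K, n)
    favourable = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1))
    return favourable / total


# 2000 quantified proteins, 40 in the pathway; 100 differential, 8 in the pathway.
print(enrichment_pvalue(2000, 40, 100, 8))
```

The choice of background matters: using only the proteins actually quantified in the experiment, rather than the whole proteome, avoids inflating significance for terms that are simply easy to detect by mass spectrometry.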

The bottom-up proteomics data analysis pipeline encompasses the entire process from sample to biological insight. Each stage’s optimization directly influences data quality and reliability. Leveraging MtoZ Biolabs' integrated strengths in sample processing, mass spectrometry, and bioinformatics, researchers can focus on scientific questions rather than technical complexities. For tailored, high-throughput, and high-accuracy proteomics solutions, feel free to contact us.

MtoZ Biolabs, an integrated chromatography and mass spectrometry (MS) services provider.

Related Services

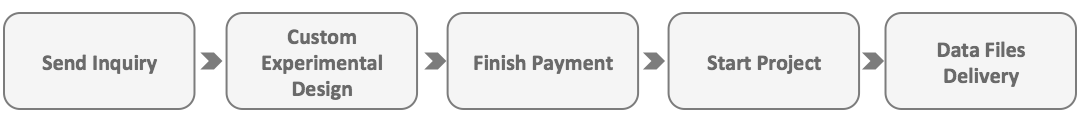

How to order?